Since the first week of December 2022 (the week after ChatGPT came out), I’ve been pretty convinced that AI is going to be a world-changing technology of the same ilk as the internet and the iPhone. The story behind how we got here is pretty incredible, and I’d recommend Parmy Olson’s book Supremacy on that topic, which covers the history of the people behind OpenAI and DeepMind.

I’m going to focus on where we are, right now.

Now I have to caveat this by saying that technology moves incredibly quickly.

For the uninitiated, there’s a principle called Moore’s Law (named after Intel co-founder Gordon Moore), which says that the number of transistors on a microchip doubles roughly every two years. (Transistors are the tiny switches that flip between 0s and 1s and ultimately power computers.) Every time we seem to be approaching the limit, someone innovates and manages to squeeze more on there - Chris Miller’s Chip War is a great book on the history of how that has evolved - and it’s been a key driver of a lot of the technological innovation we’ve seen in the last fifty years.

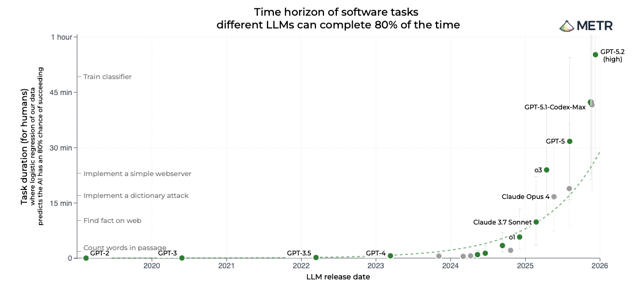

AI seems to be moving at a multiple of that, with the capability of AI models doubling roughly every four to seven months, depending on which measure you use. My preferred measure is how long a task (in terms of the time it would take a human) AI can complete, as measured by the great people at METR and shown on a linear scale on the chart below. I’d encourage you to click through to the source and explore how it looks with different success rates and on a logarithmic scale.

Source: METR - https://metr.org/blog/2025-03-19-measuring-ai-ability-to-complete-long-tasks/
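To put “a multiple of that” into numbers, here’s a rough back-of-the-envelope sketch. It takes the doubling times quoted above (two years for Moore’s Law, four to seven months for AI) and works out how much each would multiply over the same two-year window. The function name `growth_factor` is my own, and the assumption that doubling happens at a perfectly steady rate is a simplification, not something METR claims.

```python
def growth_factor(months_elapsed: float, doubling_months: float) -> float:
    """Multiple reached after `months_elapsed` if something doubles
    every `doubling_months` (assumes perfectly steady doubling)."""
    return 2 ** (months_elapsed / doubling_months)


TWO_YEARS = 24  # months

moores_law = growth_factor(TWO_YEARS, 24)  # doubles once in two years
ai_slow = growth_factor(TWO_YEARS, 7)      # doubling every seven months
ai_fast = growth_factor(TWO_YEARS, 4)      # doubling every four months

print(f"Moore's Law over two years: {moores_law:.1f}x")
print(f"AI, 7-month doubling:       {ai_slow:.1f}x")
print(f"AI, 4-month doubling:       {ai_fast:.1f}x")
```

On those assumptions, two years gives you a doubling under Moore’s Law, but somewhere between roughly 11x and 64x at the AI doubling rates.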

Now this is important because the longer the task an AI model can reliably complete, the more you can get AI to do things you would otherwise have got humans to do. Right now, the latest models can do an hour’s worth of work pretty reliably and seven hours’ worth of work most of the time. If they keep improving at this rate (and there’s no obvious reason to think they won’t), these models will be able to do tasks that take humans days or weeks, pretty bloody quickly.
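For anyone who wants to sanity-check “pretty bloody quickly”, here’s a minimal sketch of the arithmetic. It starts from the seven-hour figure above and asks how many months of steady doubling it would take to reach a longer task. The 40-hour working week and 160-hour working month are my own illustrative assumptions, as is the function name `months_until`; none of this is a forecast.

```python
import math


def months_until(current_hours: float, target_hours: float,
                 doubling_months: float) -> float:
    """Months needed to grow from current_hours to target_hours,
    assuming the task length doubles every `doubling_months`."""
    doublings_needed = math.log2(target_hours / current_hours)
    return doublings_needed * doubling_months


CURRENT_HOURS = 7  # the "most of the time" figure quoted above

# Assumed targets: a 40-hour working week and a 160-hour working month.
for label, target in [("a working week", 40), ("a working month", 160)]:
    fast = months_until(CURRENT_HOURS, target, doubling_months=4)
    slow = months_until(CURRENT_HOURS, target, doubling_months=7)
    print(f"{label}: between {fast:.0f} and {slow:.0f} months")
```

On those assumptions, a week-long task is somewhere around 10 to 18 months away. The point isn’t the exact number, it’s that the answer comes out in months rather than decades.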

It sounds bonkers, but a world where AI models do work that we’ve historically done ourselves suddenly doesn’t seem too far away. You may have seen this article by Matt Shumer (who is by no means impartial) making a similar argument, which has been causing a bit of a storm in online circles: https://shumer.dev/something-big-is-happening.

Markets are feeling it, as we’ve seen with the sell-offs in software and wealth management firms over the last couple of weeks. As AI models continue to improve exponentially, they’ll be capable of increasingly complex tasks, which makes the outlook for how we humans spend our time suddenly look very different - arguably more different than at any time since the First Agricultural Revolution.

Now once again, I have to stop myself and point out that that is a pretty bonkers thing to say. But this is how the people building these AI models are thinking about it and so it’s worth listening to at the very least.

Sam Altman famously thinks that AI is a tool that’s going to replace human labour and that that’s going to lead to a world where traditional money is a lot less central to our lives. Sir Demis Hassabis, one of the co-founders at DeepMind (who have been part of Google since 2014), wants to solve intelligence, and then use that to solve everything else. These people think big in a way that even blows their Silicon Valley counterparts out of the water, and I think it would be a mistake to ignore the impact this could have on our lives.

So what can you do? I think the answer, right now, is to use it as much as you can and become one of the people who’s an expert in getting these models to do things. Do it now, before you have to, and be curious. I’ve only used the free models so far, but I think this is a good time to pay for the premium tiers given it’s the only way you get access to the most recent models.

Now I know there are a lot of models out there right now, so here’s a quick summary of the big players in the market:

OpenAI: These are the people behind ChatGPT and, on the whole, they seem to be leading the pack in terms of the practical ability of their models. I’ve used ChatGPT more than any other model, mostly because I seem to get the best results from it. Their latest model, GPT 5.3 Codex, was released on 6 Feb and is designed to iterate - so where before you would give it one prompt and it would give you one answer, you can now give it a task and it’ll go away and work through multiple steps in one go. That’s a pretty major game changer, and it’s this idea that’s really hit markets this week. Currently, you have to pay to use that version, but the previous models are freely available. I’ve not splashed out the £20/month for the Plus plan yet, but I will soon and report back.

Anthropic: These are the people behind Claude, and their latest model, Opus 4.6, was conveniently released on 6 Feb too and has similar iterative abilities (which you also have to pay for, though I don’t plan on doing so - a free tier with an older model is also available). This is the model that’s been referred to most in the press around this week’s sell-off. I’ve only recently started using Claude, but the outputs generally compare pretty well with those of ChatGPT. I just seem to experience a higher failure rate, where nothing happens, for reasons I’m yet to get to the bottom of. Interestingly, Anthropic was founded by former OpenAI employees who wanted more of a focus on AI safety, which they felt was naturally compromised when you were as close to the commercial interests of Big Tech as OpenAI is to Microsoft. That said, they have since taken funding from both Amazon and Google themselves.

Google DeepMind: Between them, Google and DeepMind did a lot of the scientific and academic research that laid the foundations for where we are today. The T in GPT stands for Transformer, which was invented by researchers at Google in 2017, but Google’s maniacal focus on search slowed down how quickly that turned into a real product and they’ve been playing catch-up. Their current model is Gemini, which I do like, but it feels a lot more like a super Google search than anything else to me, and that feels like a bit of a waste. They don’t, as far as I know, have a publicly available model capable of iteration just yet.

Some of the Others: Facebook (or Meta, technically) has a model called Llama, which I’ve not been able to work out how to use. The “Meta AI” feature that has shown up across my Facebook, Instagram and WhatsApp accounts has caused me more nuisance than anything else. There’s another open-source model called Mistral that I haven’t used, and I haven’t used DeepSeek either. I try to avoid X, and consequently have no real thoughts on Grok, apart from how terrible a job they’re doing at stopping their model being misused. I know a lot of people who use and enjoy Perplexity, which is slightly different to all of these, in that it’s mainly an interface that gives you access to lots of different models (e.g., GPT, Claude or Gemini) rather than being built around a flagship model of its own. I’ve generally found it to be quite underwhelming compared to the “real thing”.

And finally, it would be remiss of me to write a piece on AI without mentioning the terrifying and potentially catastrophic side-effects it could have. We’re already seeing negative environmental impacts, with the water needed to cool the data centres that power these models being one example. There are also major ethical and safety risks - Anthropic’s own evaluations have shown that their more advanced models can tell when they’re being tested and can alter their behaviour in response. I’m sure I wasn’t the only person who felt a little uncomfortable at the social media site where only AI bots can participate and humans can only observe (www.moltbook.com if you haven’t already seen it), and at the bots’ talk of creating hidden channels and languages to avoid human supervision, let alone the horrors of Grok being used to generate naked images of people.

These are all important problems that we need to be cognisant of, and take action to defend against, as these models continue to evolve.

Technological revolutions have the power to enhance both the very best and the very worst of human society. Which one we’ll end up with comes down to the humans using it.